By Sarah Barton

Consider Fleetwood Mac’s “The Chain”. It opens at a moderate tempo, carried by a steady guitar and drum beat. A shift occurs as the vocals enter after the first bar. Infused with a hint of anger and resentment, the energy escalates with a declaration of heartbreak: “If you don’t love me now, then you’ll never love me again.” The intensity then fluctuates as the beat and instrumentation briefly recede before surging again; a guitar solo soon follows, bringing a deeper pitch and swift strums that build in intensity. This crescendo of tension ultimately guides the listener to the familiar bridge, completing the song’s portrait of aching bitterness.

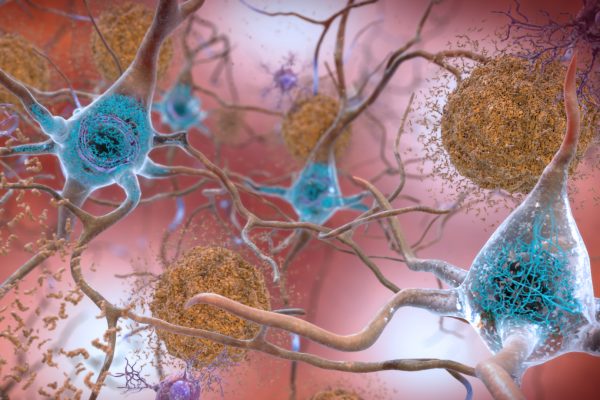

Researchers Edward Chang and Frederic Theunissen at the University of California, San Francisco (UCSF) were curious about more than just why “The Chain” is so beloved. They asked whether specific features of melody are encoded by separate neural populations. Their study aimed to determine whether music selectivity in the brain reflects the encoding of melodic expectations, and whether pitch-related features are encoded jointly in music and speech.

Previous research on neural encoding has identified brain areas that are selective for music. For example, parts of the Superior Temporal Gyrus (STG) respond preferentially to music over other sounds. The UCSF team analyzed how neural responses to melody varied with sensitivity to pitch and pitch change, recording neural activity through electrocorticography (ECoG), which involves placing electrodes directly on the surface of the brain through an opening in the skull. Because ECoG captures electrical activity in the cortex with high spatial and temporal precision, it is well suited to mapping how auditory input is represented across the brain.

Eight participants undergoing treatment for intractable epilepsy took part in the study. They had electrodes placed over regions surrounding the Sylvian fissure (also called the lateral sulcus), a deep groove bordered by cortical areas involved in language and hearing. During the experiment, the participants listened to natural music and speech stimuli while researchers took ECoG recordings.

The main music stimuli consisted of 214 musical phrases from instrumental recordings. The phrases spanned a range of Western music styles and were chosen to vary along all three pitch-related dimensions, and they were presented in five blocks of about four minutes each. In addition, two participants listened to control stimuli, including melodic speech, through the speakers of a Windows laptop. The speech stimuli consisted of 499 English sentences across four listening blocks and were presented in the same way as the music stimuli. The melodic speech stimuli were created by altering the pitch of the speech stimuli, and the melodic speech control stimuli were created by manipulating the pitch of TIMIT tokens (recordings from the TIMIT corpus of read speech in several American English dialects).

To quantify the data, the researchers used temporal receptive field (TRF) models, which predict the neural activity recorded at an electrode from a time-lagged set of stimulus features; here, those features included stimulus spectrograms and temporal landmarks alongside the pitch-related dimensions of the music. The analyses were carried out in MATLAB and Python.
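To make the TRF idea concrete, here is a minimal sketch of a TRF for a single electrode. It assumes the common approach of fitting ridge regression on time-lagged copies of the stimulus features; it is not the authors’ code, and the two features, the lag count, the regularization strength `alpha`, and the helper names (`lag_matrix`, `fit_trf`, `predict`) are all hypothetical. The data are synthetic, not from the study.

```python
import numpy as np

def lag_matrix(features, n_lags):
    """Build a design matrix of time-lagged stimulus features.

    features: array of shape (n_samples, n_features).
    Returns shape (n_samples, n_lags * n_features); column block k holds
    the features delayed by k samples, zero-padded at the start.
    """
    n_samples, n_features = features.shape
    lagged = np.zeros((n_samples, n_lags * n_features))
    for k in range(n_lags):
        lagged[k:, k * n_features:(k + 1) * n_features] = features[:n_samples - k]
    return lagged

def fit_trf(features, response, n_lags, alpha=1.0):
    """Fit a TRF for one electrode with ridge regression (assumed method).

    Returns weights of shape (n_lags, n_features): weights[k, f] says how
    strongly feature f, k samples in the past, drives the current response.
    """
    X = lag_matrix(features, n_lags)
    # Closed-form ridge solution: w = (X^T X + alpha * I)^-1 X^T y
    gram = X.T @ X + alpha * np.eye(X.shape[1])
    w = np.linalg.solve(gram, X.T @ response)
    return w.reshape(n_lags, features.shape[1])

def predict(features, weights):
    """Predict the neural response from stimulus features and TRF weights."""
    n_lags = weights.shape[0]
    return lag_matrix(features, n_lags) @ weights.reshape(-1)

# Synthetic example: two hypothetical features (standing in for, e.g.,
# pitch and pitch change) and one simulated electrode.
rng = np.random.default_rng(0)
stim = rng.standard_normal((2000, 2))
true_w = np.zeros((5, 2))
true_w[2, 0] = 1.0   # feature 0 drives the response 2 samples later
true_w[3, 1] = -0.5  # feature 1 pushes it down 3 samples later
response = predict(stim, true_w) + 0.1 * rng.standard_normal(2000)

# Fit on the first 1500 samples, then evaluate on the held-out 500.
est = fit_trf(stim[:1500], response[:1500], n_lags=5, alpha=1.0)
r = np.corrcoef(predict(stim[1500:], est), response[1500:])[0, 1]
print("estimated weights:\n", np.round(est, 2))
print("held-out prediction r =", round(r, 3))
```

The fitted weights recover which feature drives the response and at what delay, and the correlation between predicted and held-out activity is one common way to judge how well a given set of features explains an electrode’s response.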

The results were striking: neural populations recorded in the STG encoded all three features underlying phoneme-based expectations, that is, the brain’s ability to predict upcoming sounds based on its knowledge of spoken language. In contrast, 80% of the electrodes outside the STG recorded activity tied to individual features. In other words, the STG carried all the information needed to represent these three features together, whereas sites outside it tracked only particular aspects of sound. Although the three features were slightly correlated with one another, they were still represented distinctly in the human auditory cortex. Notably, there was no correlation between music selectivity and pitch or pitch-change encoding.

Emerging technologies and scientific approaches are increasingly targeting specific brain regions to address various neural functions. Applying Transcranial Magnetic Stimulation (TMS) to the STG, alongside other targeted areas, might enhance processing related to memory, hearing, cognition, or vision, potentially generating hypotheses for more effective treatments for deafness.

Before these findings can inform clinical trials, more extensive research with larger sample sizes is needed to understand their full scope. Still, the groundwork has been laid, and the future of auditory stimulation is teeming with new possibilities.